Why 'localhost' Fails in Docker: Exploring Bridge Networks and Solutions

Introduction

In this post, we will discuss an issue related to connecting an application container and a PostgreSQL container via TypeORM using docker-compose. Specifically, the connection fails when the host is set to localhost.

This is a common issue for Docker users, so let’s dive in.

Issue Description

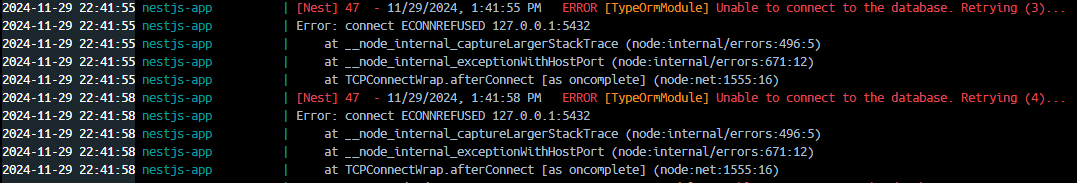

As mentioned in the introduction, we attempted to run an application container and a PostgreSQL container using docker-compose. However, the error shown in the image below occurred:

Why does this happen?

The issue arises because, inside a container, localhost resolves to the loopback address (127.0.0.1) of that container itself. Each container has its own network namespace, so instead of pointing to the PostgreSQL container, localhost points back at the application container.

In short, PostgreSQL is not within the network space of the application container, causing the connection to fail.
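To make the failure mode concrete, here is a minimal sketch of a startup check that flags a loopback DB_HOST before the application tries to connect. The function names (isLoopbackHost, checkDbHost) are hypothetical helpers written for this post, not part of TypeORM or Docker.

```typescript
// Host names that always resolve to the caller's own loopback interface.
const LOOPBACK_HOSTS = new Set(["localhost", "127.0.0.1", "::1"]);

/** Returns true when `host` points back at the container that uses it. */
function isLoopbackHost(host: string): boolean {
  const normalized = host.trim().toLowerCase();
  // The whole 127.0.0.0/8 block is loopback, not only 127.0.0.1.
  return LOOPBACK_HOSTS.has(normalized) || /^127(\.\d{1,3}){3}$/.test(normalized);
}

/** Hypothetical startup check: returns a warning message, or null if the host looks fine. */
function checkDbHost(host: string): string | null {
  if (isLoopbackHost(host)) {
    return `DB_HOST="${host}" resolves inside this container, not to the PostgreSQL container`;
  }
  return null;
}
```

Calling checkDbHost with the value of DB_HOST at startup would surface the misconfiguration as a readable message instead of a bare connection error.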

Solution

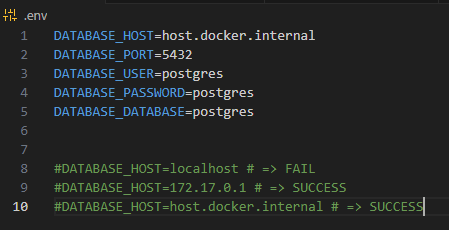

The database host is managed through the .env file. As shown below, we can use either 172.17.0.1 or host.docker.internal as the DB_HOST.
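As a rough illustration of how DB_HOST from the .env file could end up in the connection settings, the sketch below builds a plain options object from an environment map. Only DB_HOST comes from this post; DB_PORT, DB_USER, DB_PASSWORD, and DB_NAME, along with their defaults, are assumed names. The PostgresOptions interface is a local stand-in for the matching fields of TypeORM's Postgres options, so the block runs without installing TypeORM.

```typescript
// Local stand-in for the subset of TypeORM's Postgres options used here.
interface PostgresOptions {
  type: "postgres";
  host: string;
  port: number;
  username: string;
  password: string;
  database: string;
}

type Env = Record<string, string | undefined>;

/** Builds connection options from environment variables (names other than DB_HOST are assumptions). */
function buildDbOptions(env: Env): PostgresOptions {
  const host = env["DB_HOST"];
  if (!host) {
    throw new Error("DB_HOST is not set");
  }
  const port = Number(env["DB_PORT"] ?? "5432");
  if (!Number.isInteger(port) || port <= 0 || port > 65535) {
    throw new Error(`DB_PORT is not a valid port: ${env["DB_PORT"]}`);
  }
  return {
    type: "postgres",
    host,
    port,
    username: env["DB_USER"] ?? "postgres",
    password: env["DB_PASSWORD"] ?? "",
    database: env["DB_NAME"] ?? "postgres",
  };
}
```

In an application, the environment map would typically be process.env, and the returned object would be passed on to TypeORM when the data source is created.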

Why do 172.17.0.1 and host.docker.internal work?

To understand this, consider the following flow of a network request sent by a Docker container:

container (eth0) → veth → docker0 (bridge) → host network interface (eth0) → external network

The key component here is docker0 (the bridge). Docker automatically creates a bridge network for containers.
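One way to see the container end of this path is to list the network interfaces visible to the Node.js process. The sketch below uses Node's os.networkInterfaces(); the helper name listIpv4Addresses is made up for this post. Run inside a container, it would typically show a loopback entry and the container's own eth0 address, though the exact names depend on the environment.

```typescript
import { networkInterfaces } from "node:os";

interface Ipv4Address {
  name: string;      // interface name, e.g. "lo" or "eth0"
  address: string;   // IPv4 address assigned to that interface
  internal: boolean; // true for loopback interfaces
}

/** Collects every IPv4 address visible to this process, one entry per address. */
function listIpv4Addresses(): Ipv4Address[] {
  const result: Ipv4Address[] = [];
  for (const [name, infos] of Object.entries(networkInterfaces())) {
    for (const info of infos ?? []) {
      if (info.family === "IPv4") {
        result.push({ name, address: info.address, internal: info.internal });
      }
    }
  }
  return result;
}
```

The loopback entry is what localhost resolves to, which is why it never leaves the container.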

What is a Bridge Network in Docker?

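- Container Communication: Containers on the same user-defined bridge network (such as the one docker-compose creates for a project) can reach each other by container name. On the default bridge network, they can only reach each other by IP address.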

- Host Isolation: The bridge network provides network isolation between the host and the containers.
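Name-based communication can be checked from inside the application container with a DNS lookup. The sketch below uses lookup from Node's dns/promises module; resolveHost is a hypothetical helper, and any container name passed to it (for example a PostgreSQL container's name) is an assumption about your setup.

```typescript
import { lookup } from "node:dns/promises";

/** Resolves a host name to an IPv4 address, or returns null when it cannot be resolved. */
async function resolveHost(name: string): Promise<string | null> {
  try {
    const { address } = await lookup(name, { family: 4 });
    return address;
  } catch {
    // The name is unknown on this network (or DNS is unavailable).
    return null;
  }
}
```

If the name of the other container resolves to an address, the two containers share a network that provides name resolution; a null result suggests they do not.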

Let’s verify if the bridge is created, whether the containers are connected to the same bridge, and explore the bridge details.

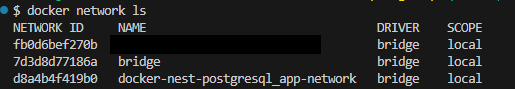

1. Check the Network List

    docker network ls

As shown above, the network our containers are attached to uses the bridge driver (see the DRIVER column).

2. Bridge Network Details

    docker network inspect bridge

    [
      {
        "Name": "bridge",
        "Id": "7d3d8d77186a1f2dd28027ac25a178c87d0d432ecab2852b78086f68b6301696",
        "Created": "2024-11-29T01:57:46.973940286Z",
        "Scope": "local",
        "Driver": "bridge",
        "EnableIPv6": false,
        "IPAM": {
          "Driver": "default",
          "Options": null,
          "Config": [
            {
              "Subnet": "172.17.0.0/16",
              "Gateway": "172.17.0.1"
            }
          ]
        },
        "Internal": false,
        "Attachable": false,
        "Ingress": false,
        "ConfigFrom": {
          "Network": ""
        },
        "ConfigOnly": false,
        "Containers": {},
        "Options": {
          "com.docker.network.bridge.default_bridge": "true",
          "com.docker.network.bridge.enable_icc": "true",
          "com.docker.network.bridge.enable_ip_masquerade": "true",
          "com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",
          "com.docker.network.bridge.name": "docker0",
          "com.docker.network.driver.mtu": "1500"
        },
        "Labels": {}
      }
    ]

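The Driver is bridge, and the Gateway is 172.17.0.1. That gateway address belongs to the host side of the docker0 bridge, so a request sent to 172.17.0.1 arrives at the host itself. This explains why 172.17.0.1 works: assuming the PostgreSQL container publishes its port to the host, the connection reaches PostgreSQL through that published port.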
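The relationship between the Subnet and Gateway fields can be checked with a little arithmetic. The sketch below reads the first IPAM entry from the inspect output and tests whether an address falls inside a CIDR block. The helper names (readIpamConfig, ipv4ToInt, isInSubnet) are hypothetical, and only IPv4 is handled.

```typescript
// Shape of the fields read from `docker network inspect` output.
interface IpamConfig {
  Subnet: string;
  Gateway: string;
}

/** Pulls the first Subnet/Gateway pair out of the inspect JSON text. */
function readIpamConfig(inspectJson: string): IpamConfig {
  const networks = JSON.parse(inspectJson) as Array<{ IPAM?: { Config?: IpamConfig[] } }>;
  const config = networks[0]?.IPAM?.Config?.[0];
  if (!config) {
    throw new Error("no IPAM config found");
  }
  return config;
}

/** Converts dotted IPv4 text such as "172.17.0.1" to an unsigned integer. */
function ipv4ToInt(ip: string): number {
  const parts = ip.split(".");
  if (parts.length !== 4 || parts.some((p) => !/^\d{1,3}$/.test(p) || Number(p) > 255)) {
    throw new Error(`invalid IPv4 address: ${ip}`);
  }
  return parts.reduce((acc, p) => acc * 256 + Number(p), 0);
}

/** Returns true when `ip` lies inside the IPv4 block written as "base/prefix". */
function isInSubnet(ip: string, cidr: string): boolean {
  const [base, prefixText] = cidr.split("/");
  const prefix = Number(prefixText);
  if (base === undefined || !Number.isInteger(prefix) || prefix < 0 || prefix > 32) {
    throw new Error(`invalid CIDR: ${cidr}`);
  }
  // Two addresses share a block when they agree after dropping the host bits.
  const blockSize = 2 ** (32 - prefix);
  return Math.floor(ipv4ToInt(ip) / blockSize) === Math.floor(ipv4ToInt(base) / blockSize);
}
```

With the output above, the gateway 172.17.0.1 lies inside 172.17.0.0/16, while an address such as 192.168.65.254 does not, which hints that it belongs to a different network.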

3. Why does host.docker.internal work?

- host.docker.internal is a DNS name provided by Docker to access the host machine.

- It maps to the host machine’s IP address, allowing containers to reference services running on the host.

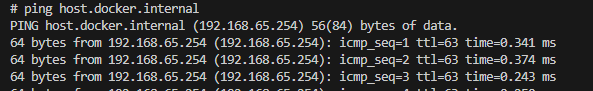

You can test this by pinging host.docker.internal from within a container:

    docker exec -it nestjs-app sh
    ping host.docker.internal

In our environment, it resolved to 192.168.65.254, which explains why setting the DB_HOST to 192.168.65.254 also works. The exact address can differ between Docker setups.
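A ping only shows that the host is reachable, not that PostgreSQL is accepting connections. As a complementary check, the sketch below attempts a plain TCP connection with Node's net module. The helper name canConnect, the 2-second default timeout, and the port 5432 in the usage note are assumptions for illustration.

```typescript
import { connect } from "node:net";

/** Resolves to true if a TCP connection to host:port opens within the timeout, false otherwise. */
function canConnect(host: string, port: number, timeoutMs = 2000): Promise<boolean> {
  return new Promise((resolve) => {
    const socket = connect({ host, port });
    const finish = (ok: boolean): void => {
      socket.destroy(); // always release the socket, whatever the outcome
      resolve(ok);
    };
    socket.setTimeout(timeoutMs);
    socket.once("connect", () => finish(true));
    socket.once("timeout", () => finish(false));
    socket.once("error", () => finish(false)); // refused, unreachable, or unresolvable
  });
}
```

From inside the application container, awaiting canConnect("host.docker.internal", 5432) would indicate whether something is listening on that port of the host, assuming PostgreSQL uses its default port.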

Conclusion

Today, we explored container connectivity in Docker. By diving deeper into this topic, we gained a better understanding of Docker’s structure and communication principles. While there are still areas I need to study further, this is a step in the right direction.

I hope this post was helpful to you.

Thank you for reading, and happy blogging! 🚀